Broadly capable AI systems are poised to become an engine of economic growth and scientific progress. The fundamental insight behind this engine is “the bitter lesson”: AI methods that learn independently by taking better advantage of massive amounts of computation (or “compute”) vastly outcompete methods that are hand-crafted to encode human knowledge.

Nowhere is this more evident than in today’s large language models. Despite the massive capability gains in state-of-the-art language models over the last six years, the key difference between today’s best-performing models and GPT-1 (released in 2018) is scale. The foundational technical architecture remains largely the same, but the amount of computation used in training has increased by a factor of one million. More broadly, the amount of computation used to train the most capable AI models has doubled about every six months from 2010 to today.

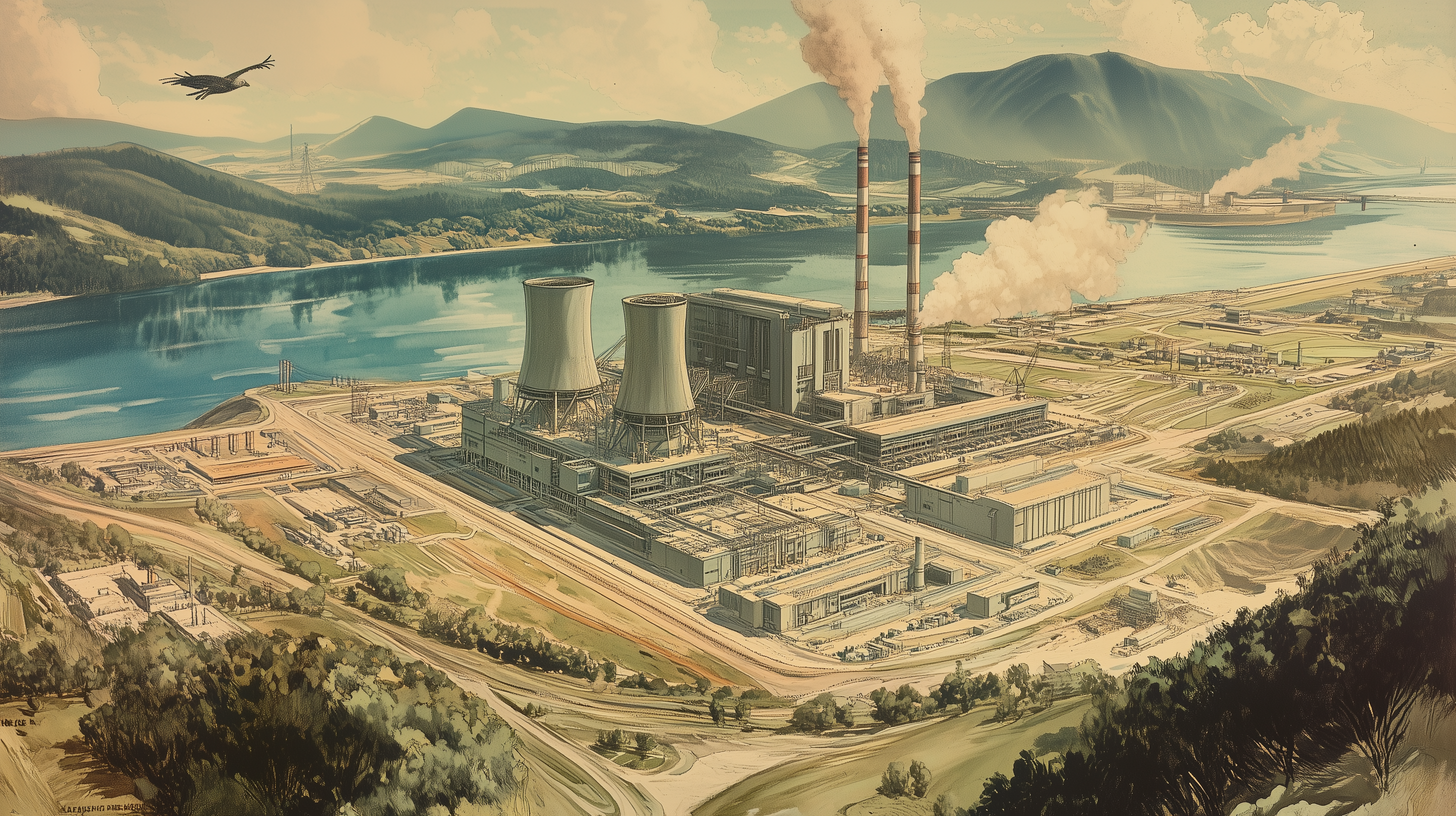
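
The growth figures above can be checked with a little arithmetic. The Python sketch below is illustrative only: the function names are hypothetical, and it assumes smooth exponential growth, which real training runs only approximate. It uses just the numbers quoted in the text: a six-month doubling time and a millionfold increase over roughly six years.

```python
import math


def growth_factor(years: float, doubling_time_years: float) -> float:
    """Total multiplicative growth after `years` of steady doubling."""
    return 2 ** (years / doubling_time_years)


def implied_doubling_time_months(factor: float, years: float) -> float:
    """Doubling time (in months) needed to grow by `factor` over `years`."""
    return years * 12 / math.log2(factor)


if __name__ == "__main__":
    # Six-month doubling sustained over six years (figures from the text).
    print(f"{growth_factor(6, 0.5):,.0f}x")
    # Doubling time implied by a millionfold increase over six years.
    print(f"{implied_doubling_time_months(1e6, 6):.1f} months")
```

Under these assumptions, six-month doubling compounds to roughly a 4,000-fold increase over six years. A millionfold increase over the same period would imply a doubling time of under four months, which suggests that the largest language models have scaled faster than the broader trend.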

The physical manifestations of the bitter lesson are AI data centers: industrial facilities that house tens of thousands of specialized computer chips, run 24 hours a day, and require enormous amounts of electricity. All told, a single AI data center can consume tens of megawatts of power, akin to the consumption of a city with 100,000 inhabitants. Such data centers are needed both to train new AI models and to deploy them at scale in applications like ChatGPT.
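
As a rough sanity check on these magnitudes, the sketch below multiplies a chip count by a per-chip power draw. The chip count of 20,000 and the all-in draw of 1,500 watts per chip (covering the chip plus its share of servers, networking, and cooling) are placeholder assumptions chosen for illustration, not figures from this article. Only the 100,000-inhabitant comparison comes from the text, and the function names are hypothetical.

```python
def facility_power_mw(num_chips: int, watts_per_chip_all_in: float) -> float:
    """Facility power in megawatts for a chip count and an all-in draw per chip."""
    return num_chips * watts_per_chip_all_in / 1e6


def watts_per_resident(power_mw: float, residents: int) -> float:
    """Average power per resident if a city of `residents` drew `power_mw`."""
    return power_mw * 1e6 / residents


if __name__ == "__main__":
    # ASSUMPTION: 20,000 chips at 1,500 W each, all-in (illustrative values only).
    power = facility_power_mw(20_000, 1_500)
    print(f"Facility power: {power:.0f} MW")
    # City size taken from the text's comparison.
    print(f"Per resident: {watts_per_resident(power, 100_000):.0f} W")
```

With these placeholder inputs the facility draws 30 MW, which falls in the "tens of megawatts" range and works out to about 300 watts of continuous demand per resident of a 100,000-person city. Different hardware or cooling assumptions would shift the result.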

Given these trends, we are likely on the cusp of a computing infrastructure build-out like no other. Technological, security, and feasibility challenges will follow. We will need to efficiently network millions of powerful chips together, design infrastructure to protect AI models from sophisticated cyberattacks, and generate the massive amounts of energy needed to run these data centers.

These technical challenges create policy challenges: Are we creating conditions such that data centers for training future generations of the most powerful models are built here rather than overseas? Are incentives, regulations, and R&D support in place to ensure private actors develop technology with appropriate security? Is the supply of clean energy increasing at the pace necessary to fuel the grand projects of the next decade: the electrification of transportation, the manufacturing renaissance, and the AI infrastructure build-out?

We propose three goals for the AI infrastructure build-out:

1. We should build AI computing infrastructure in the United States.

2. We should unleash next-generation energy technologies to make it happen.

3. We should accelerate the development of security technologies to protect the AI intellectual property of the future.

Over the coming weeks, IFP will propose a plan for achieving these goals.

Part I: How to Build an AI Data Center

In the first piece of the series, Brian Potter examines the technical challenges of building AI data centers. The rise of the internet and its digital infrastructure has required the construction of vast amounts of physical infrastructure to support it. Modern data centers demand as much power as a small city, and campuses of multiple data centers can use as much power as a large nuclear reactor. The rise of AI will accelerate this trend, requiring even more data centers that are increasingly power-intensive.

Part II: How to Build the Future of AI in the United States

In the second piece, Tim Fist and Arnab Datta examine the key trends influencing the shape of AI development, and what they will mean for building the data centers of the future. Given trends in cluster sizes and power demands, AI firms are increasingly looking abroad to fulfill their energy needs. The key technological barrier to building 5 GW clusters in the U.S. within 5 years is building new power capacity. The most viable path to overcoming it is rapidly deploying “behind-the-meter” generation. But massive investments in new power capacity for AI data centers will count for little if the next generation of AI data centers suffers from the same security gaps as those that exist today.

Part III: A Policy Playbook for 5 GW Clusters in 5 Years

In the last piece in the series, Tim Fist and Arnab Datta analyze the policy levers available to the United States to ensure that future generations of data centers are both built in America and good for America.

Although the U.S. has been the innovation leader in artificial intelligence thus far, that status is not guaranteed. Across the value chain, other countries are investing heavily to capture a portion of the industry, whether it’s China investing in manufacturing new AI chips, or Saudi and Emirati sovereign wealth funds investing in new data centers.

We can hardly expect to control 100% of the AI market for years to come, nor should we. But only thoughtful, concerted policy action can ensure the future of AI is built responsibly in the United States. We lay out a roadmap for maintaining American technological leadership.